JavaScript is an integral part of most websites. Our detailed JavaScript SEO guide covers the essential elements of JS SEO, including how Google interacts with JavaScript-based sites and the best practices for optimising your site for better visibility and performance.

WHAT IS JAVASCRIPT?

JavaScript is a programming language and a fundamental tool in modern web development, powering dynamic and interactive website features. Modern browser engines execute it quickly, and when it is used well it makes pages feel faster and more responsive, which is why it’s an essential choice for creating seamless user experiences. Developers value its ease of maintenance, efficient debugging tools and the way it works alongside the rest of the web platform, making it a versatile option for front-end development. Along with HyperText Markup Language (HTML) and Cascading Style Sheets (CSS), it’s one of the three core technologies of the web, although HTML and CSS are markup and styling languages rather than programming languages.

JavaScript is powerful and creates websites that users love – dynamic, interactive, and memorable. However, from an SEO standpoint, JavaScript brings a range of complexities. This is where the real challenge begins.

HOW DOES JAVASCRIPT AFFECT SEO?

User experience and site speed – Too much JavaScript can slow your site down. If your site doesn’t load fast enough, users will bounce straight to your competitors. Bad news for your business, and even worse for your search rankings, where user experience and speed are make-or-break factors.

Internal linking – JavaScript can dynamically generate links, which can work well for users, but search engines often struggle to crawl links that are not implemented as standard HTML links (for example, a <span> or <button> that navigates through a click handler). This can compromise your site’s internal linking structure, making key pages harder to find. You can still create internal links with JavaScript, but each one must be an <a> element with an href attribute that contains the destination URL.
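As a minimal sketch, assume your navigation is built from a list of `{ url, label }` objects. Both `renderNavLink` and that data shape are hypothetical names used only for illustration. The helper emits standard `<a href>` markup, so the destination URL sits in the HTML where a crawler can read it, instead of being hidden inside a click handler.

```javascript
// Escape text so it is safe inside HTML content and double-quoted attributes.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Crawlable: a real <a> element with the destination URL in its href.
// Avoid patterns like <span onclick="location.href='/services/'">Services</span>,
// which give crawlers no href to follow.
function renderNavLink({ url, label }) {
  return `<a href="${escapeHtml(url)}">${escapeHtml(label)}</a>`;
}

// Hypothetical navigation data.
const navItems = [
  { url: "/services/", label: "Services" },
  { url: "/contact/", label: "Contact & support" },
];

const navHtml = navItems.map(renderNavLink).join("\n");
console.log(navHtml);
```

If you build links with DOM APIs instead, the same rule applies: create an `a` element and set its `href` rather than attaching navigation to a click handler on some other element.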

Images – Lazy loading is a technique that boosts performance by loading images only when they’re needed instead of all at once. It’s great for speeding up page load times. However, if it’s implemented incorrectly (for example, if images only load after a scroll or click, which Googlebot does not perform), search engines might miss those images altogether, costing you valuable visibility in image search.
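One safe pattern is the browser’s native lazy loading. In this pattern the real image URL stays in `src`, and the `loading="lazy"` attribute lets the browser decide when to fetch the file. The sketch below renders such a tag from a hypothetical `{ src, alt, width, height }` object. `renderLazyImage` is an illustrative name, not a library function.

```javascript
// Escape text so it is safe inside double-quoted HTML attributes.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Native lazy loading: the real image URL stays in src, so it is present in
// the HTML even though nobody scrolls. Explicit width and height reserve
// space so the layout does not shift when the image arrives.
// Riskier alternative: <img data-src="..."> copied into src by a scroll
// handler, which never runs for a crawler that does not scroll.
function renderLazyImage({ src, alt, width, height }) {
  return (
    `<img src="${escapeHtml(src)}" alt="${escapeHtml(alt)}" ` +
    `width="${Number(width)}" height="${Number(height)}" loading="lazy">`
  );
}

const imgHtml = renderLazyImage({
  src: "/images/red-running-shoes.jpg",
  alt: "Red running shoes, side view",
  width: 800,
  height: 600,
});
console.log(imgHtml);
```

Images that are visible as soon as the page loads are usually better left without `loading="lazy"`, so they are fetched immediately.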

Metadata – Some sites rely on JavaScript to load crucial metadata like titles and meta descriptions. The problem? If it doesn’t render properly, search engines might miss it entirely, leaving your page poorly represented in the SERPs.
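A safer approach is to write the title and meta description into the `<head>` on the server, so they appear in the initial HTML response. The sketch below assumes page data is available as a simple object. The `renderHeadMetadata` helper and the example values are hypothetical.

```javascript
// Escape text so it is safe inside HTML content and double-quoted attributes.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Render the title and meta description on the server, so they are part of
// the initial HTML rather than injected later by client-side JavaScript.
function renderHeadMetadata({ title, description }) {
  return [
    "<head>",
    '  <meta charset="utf-8">',
    `  <title>${escapeHtml(title)}</title>`,
    `  <meta name="description" content="${escapeHtml(description)}">`,
    "</head>",
  ].join("\n");
}

const head = renderHeadMetadata({
  title: "Red Running Shoes | Example Store",
  description: "Lightweight red running shoes with free UK delivery & returns.",
});
console.log(head);
```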

Content accessibility – Accessible content is key for both users and search engines. To make sure your content is clear and indexable, it needs to load properly, have a crawlable URL, and follow the same SEO best practices you’d apply to an HTML site. Make sure your URLs are unique, descriptive and easy for search engines to understand.

Rendering – JavaScript can have a big impact on how a webpage loads and renders. If critical content isn’t included in the initial HTML, it might remain unindexed for some time. Render-blocking JavaScript can also slow down your page’s load time. Google advises removing or deferring any JavaScript that delays the loading of above-the-fold content, which gives users a faster, smoother experience and supports better indexing.
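As a starting point for an audit, the sketch below lists external scripts in the `<head>` that are likely to be render-blocking. These are scripts without `defer` or `async` that are not module scripts, since module scripts are deferred by default. It uses regular expressions rather than a real HTML parser and ignores inline scripts, so treat it as a rough check. `findRenderBlockingScripts` is a hypothetical helper name.

```javascript
// Matches a quoted src attribute and captures its value.
const SRC_ATTR = /\ssrc\s*=\s*["']([^"']+)["']/i;

// An external script blocks parsing unless it has defer or async,
// or is a module script (modules are deferred by default).
function isRenderBlocking(tag) {
  if (!SRC_ATTR.test(tag)) return false; // inline scripts are out of scope here
  if (/\stype\s*=\s*["']?module["'\s>]/i.test(tag)) return false;
  // Remove quoted values so a URL such as "/js/async-utils.js" is not
  // mistaken for the async attribute.
  const withoutValues = tag.replace(/(["'])[\s\S]*?\1/g, "");
  return !/\s(?:defer|async)\b/i.test(withoutValues);
}

// Return the src of every render-blocking external script inside <head>.
function findRenderBlockingScripts(html) {
  const head = html.match(/<head(?:\s[^>]*)?>([\s\S]*?)<\/head>/i);
  if (!head) return [];
  const scriptTags = head[1].match(/<script\b[^>]*>/gi) || [];
  return scriptTags.filter(isRenderBlocking).map((tag) => tag.match(SRC_ATTR)[1]);
}

const sampleHtml = `<!doctype html>
<html><head>
  <script src="/js/analytics.js"></script>
  <script src="/js/async-utils.js"></script>
  <script src="/js/app.js" defer></script>
  <script async src="/js/ads.js"></script>
  <script type="module" src="/js/main.mjs"></script>
</head><body><h1>Hello</h1></body></html>`;

console.log(findRenderBlockingScripts(sampleHtml));
```

For the scripts it reports, adding `defer` (or `async` for independent scripts such as analytics) is usually the simplest fix.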

HOW DOES GOOGLE CRAWL AND INDEX JAVASCRIPT?

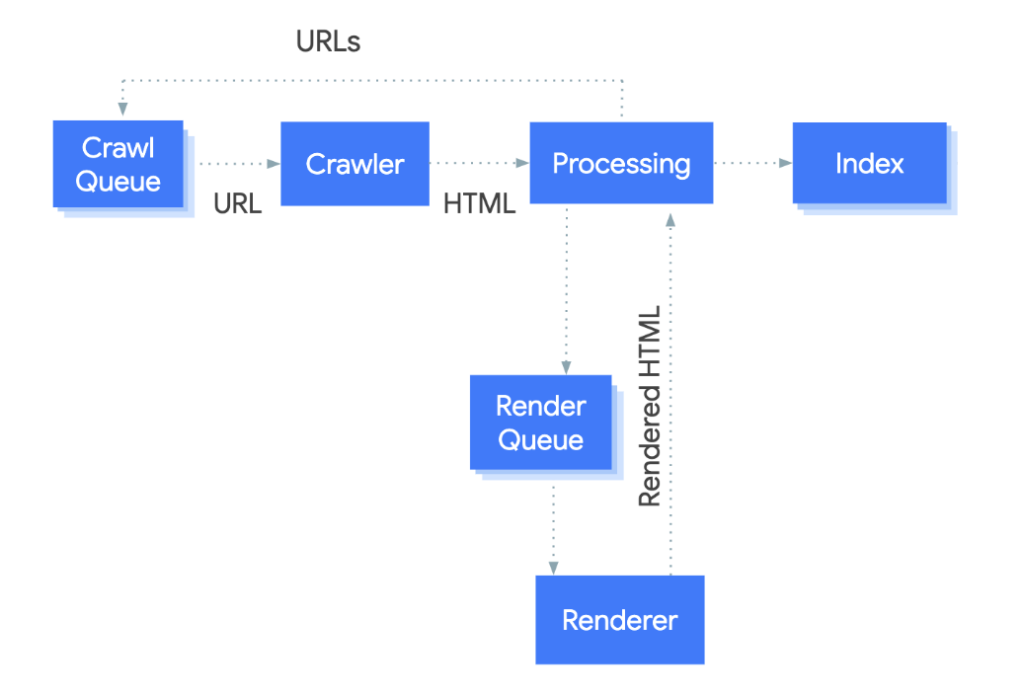

Google processes JavaScript websites in three main phases:

- Crawling

- Rendering

- Indexing

The diagram above shows how Googlebot moves a page through crawling, rendering and indexing.

First, pages are added to a queue for crawling and rendering. Googlebot fetches a URL from this queue and checks the robots.txt file to see if it’s allowed to crawl the page.

Next, Googlebot processes the HTML and identifies links to other URLs, adding them to the crawl queue. When Google’s resources allow, a headless version of Chromium renders the page and executes any JavaScript. The rendered HTML is then used to index the page.

To summarise, URLs are added to the crawl queue, processed by the crawler and then queued for rendering. Once rendered, the URL is indexed. In practice, Google’s process effectively happens in two steps. First, it crawls and indexes the raw HTML of a page. Then it returns to render the page and index the JavaScript-powered content. This second phase can take hours or even days after the initial crawl. So if your main content is loaded via JavaScript, there might be a significant delay before it’s indexed.
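To make the flow concrete, here is a toy model of the crawl, render and index steps. It is a simplified illustration, not how Googlebot is actually implemented. Robots.txt handling is reduced to `Disallow` prefixes, rendering is represented by a precomputed `renderedHtml` string, and the page data and `simulateCrawlRenderIndex` name are hypothetical. The model shows two things: content that only exists after rendering is missing from the first pass, and links that appear only after rendering are discovered late.

```javascript
// Toy model of crawl -> render -> index. Heavily simplified; for illustration only.
function simulateCrawlRenderIndex({ startUrl, pages, disallowPrefixes }) {
  const crawlQueue = [startUrl];
  const renderQueue = [];
  const seen = new Set([startUrl]);
  const index = new Map(); // url -> { firstWave, secondWave }
  const log = [];

  const extractLinks = (html) =>
    [...html.matchAll(/<a\s[^>]*href="([^"]+)"/gi)].map((m) => m[1]);
  const textOf = (html) =>
    html.replace(/<[^>]*>/g, " ").replace(/\s+/g, " ").trim();
  const enqueue = (url) => {
    if (!seen.has(url)) {
      seen.add(url);
      crawlQueue.push(url);
    }
  };

  while (crawlQueue.length > 0 || renderQueue.length > 0) {
    if (crawlQueue.length > 0) {
      // Crawling: check robots.txt, read raw HTML, queue discovered links.
      const url = crawlQueue.shift();
      if (disallowPrefixes.some((prefix) => url.startsWith(prefix))) {
        log.push(`blocked ${url}`);
        continue;
      }
      const page = pages[url];
      if (!page) continue;
      log.push(`crawl ${url}`);
      extractLinks(page.rawHtml).forEach(enqueue);
      index.set(url, { firstWave: textOf(page.rawHtml), secondWave: null });
      renderQueue.push(url);
    } else {
      // Rendering happens later and can reveal new content and links.
      const url = renderQueue.shift();
      log.push(`render ${url}`);
      const rendered = pages[url].renderedHtml;
      extractLinks(rendered).forEach(enqueue);
      index.get(url).secondWave = textOf(rendered);
    }
  }
  return { index, log };
}

// Hypothetical site: the home page's offers link only appears after rendering.
const pages = {
  "/": {
    rawHtml:
      '<h1>Home</h1><a href="/about/">About</a><a href="/private/">Account</a><div id="app"></div>',
    renderedHtml:
      '<h1>Home</h1><a href="/about/">About</a><a href="/private/">Account</a>' +
      '<div id="app"><p>Latest offers</p><a href="/offers/">See offers</a></div>',
  },
  "/about/": { rawHtml: "<h1>About us</h1>", renderedHtml: "<h1>About us</h1>" },
  "/offers/": { rawHtml: "<h1>Offers</h1>", renderedHtml: "<h1>Offers</h1>" },
};

const result = simulateCrawlRenderIndex({ startUrl: "/", pages, disallowPrefixes: ["/private/"] });
console.log(result.log);
```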

JAVASCRIPT RENDERING OPTIONS

If your website relies on JavaScript, it’s essential to determine how the JavaScript code will be rendered. This decision significantly impacts both your site’s performance and the user experience.

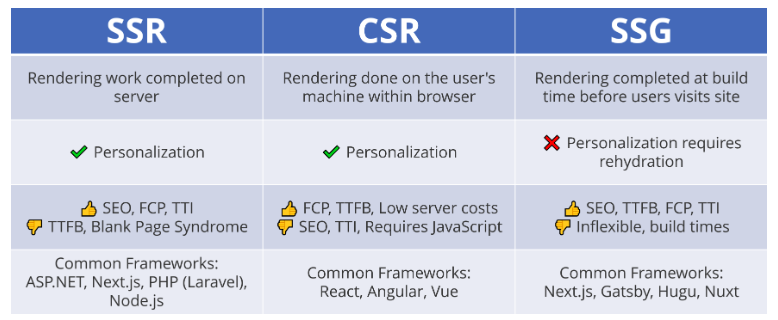

There are three primary JavaScript rendering methods to consider:

- Server-Side Rendering (SSR)

- Client-Side Rendering (CSR)

- Static Site Generation (SSG)

Each approach comes with its own advantages and limitations.

Source: Thomas Desmond

Server-Side Rendering. With SSR, the server handles the heavy lifting by processing the JavaScript and sending a fully rendered page to the browser. This means the browser doesn’t need to run JavaScript before the main content can be displayed.

The upside? Faster initial page loads and better crawlability and indexing – your content is immediately accessible to search engines. The downside? SSR can put more strain on your server, which may impact performance under heavy traffic.
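Below is a minimal sketch of server-side rendering using only Node.js’s built-in `http` module. The product data, the routes and the `handleRequest` function are hypothetical. The point it shows is that the first response already contains the title, description and main content, with no client-side JavaScript involved.

```javascript
const http = require("node:http");

// Hypothetical data source; in practice this might be a database or CMS.
const products = {
  "/shoes/red-running-shoes/": {
    name: "Red Running Shoes",
    description: "Lightweight red running shoes with free returns.",
  },
};

function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Server-side rendering: build the complete HTML for every request.
function handleRequest(pathname) {
  const product = products[pathname];
  if (!product) {
    return { status: 404, html: "<!doctype html><title>Not found</title><h1>Not found</h1>" };
  }
  const name = escapeHtml(product.name);
  const description = escapeHtml(product.description);
  return {
    status: 200,
    html: `<!doctype html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>${name}</title>
  <meta name="description" content="${description}">
</head>
<body>
  <h1>${name}</h1>
  <p>${description}</p>
</body>
</html>`,
  };
}

// Wire the handler into Node's HTTP server; call server.listen(3000) to run it.
const server = http.createServer((req, res) => {
  const { pathname } = new URL(req.url, "http://localhost");
  const { status, html } = handleRequest(pathname);
  res.writeHead(status, { "Content-Type": "text/html; charset=utf-8" });
  res.end(html);
});
```

Every request does this rendering work on the server. That work is the source of the extra server load mentioned above, and it is why SSR setups often add caching.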

Client-Side Rendering. With CSR, JavaScript runs in the user’s browser rather than on the server. When a user visits a page, the server delivers a minimal HTML shell and the JavaScript files, which the browser executes to build and display the content.

The benefits? Reduced server load and, in some cases, faster navigation once the app has loaded. But there’s a trade-off. Pages can take longer to load initially, and CSR can create challenges for crawling and indexing, potentially hurting your site’s search engine visibility.
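The sketch below shows why CSR is harder for crawlers. The HTML the server sends is only a shell with an empty container. The product name and description appear only after a script such as the hypothetical `/app.js` runs. The `renderProductClientSide` function is illustrative. It takes the root element and document as parameters so that it can also be tried outside a browser.

```javascript
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// What the server sends with client-side rendering: a shell with an empty
// container. A crawler reading only this HTML sees no product content.
const initialHtml = `<!doctype html>
<html lang="en">
<head>
  <meta charset="utf-8">
  <title>Example Store</title>
  <script src="/app.js" defer></script>
</head>
<body>
  <div id="app"></div>
</body>
</html>`;

// Roughly what /app.js might do in the browser once it has the product data:
//   renderProductClientSide(document.getElementById("app"), document, product);
function renderProductClientSide(root, doc, product) {
  doc.title = `${product.name} | Example Store`;
  root.innerHTML =
    `<h1>${escapeHtml(product.name)}</h1>` +
    `<p>${escapeHtml(product.description)}</p>`;
}
```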

Static Site Generation. With SSG, websites are delivered as pre-built static files, exactly as they’re stored on the server, with no server-side processing when a user visits. Rendering happens ahead of time, at build time, before any user requests are made.

The main benefits include fast load speeds and strong SEO potential. However, implementing dynamic functionality can be challenging, and content management can be more complex, because content changes usually require the site to be rebuilt.
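A static site generator boils down to a build step like the one sketched below. Each page is rendered once and written to disk as `index.html`, and a web server or CDN then serves those files unchanged. The page list, the folder layout and `buildStaticSite` are hypothetical. Real generators add templates, assets and incremental builds.

```javascript
const fs = require("node:fs");
const os = require("node:os");
const path = require("node:path");

function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Render every page at build time and write it to <outDir>/<path>/index.html.
function buildStaticSite(pages, outDir) {
  const written = [];
  for (const page of pages) {
    // "/shoes/red-running-shoes/" -> <outDir>/shoes/red-running-shoes/
    const dir = path.join(outDir, ...page.path.split("/").filter(Boolean));
    fs.mkdirSync(dir, { recursive: true });
    const title = escapeHtml(page.title);
    const html = `<!doctype html>
<html lang="en">
<head><meta charset="utf-8"><title>${title}</title></head>
<body><h1>${title}</h1><p>${escapeHtml(page.body)}</p></body>
</html>`;
    const file = path.join(dir, "index.html");
    fs.writeFileSync(file, html);
    written.push(file);
  }
  return written;
}

// Build into a temporary folder for the demo.
const outDir = fs.mkdtempSync(path.join(os.tmpdir(), "ssg-demo-"));
const files = buildStaticSite(
  [
    { path: "/", title: "Example Store", body: "Welcome." },
    { path: "/shoes/red-running-shoes/", title: "Red Running Shoes", body: "Lightweight and fast." },
  ],
  outDir
);
console.log(files);
```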

HOW TO CHECK PAGE RENDERING

To see how your pages are rendered, use the URL Inspection tool in Google Search Console. It lets you examine a specific URL on your site to see how Google perceives it. The tool provides crucial insights into crawling and indexing, as well as details from Google’s index, such as whether the page has been indexed successfully. To see the rendered version of a page, run a live test (Test live URL) and open View tested page, which shows the rendered HTML and a screenshot.
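If you need to check many URLs, Search Console also offers a URL Inspection API. The sketch below makes several assumptions. It needs Node.js 18 or later for the built-in `fetch`, and an OAuth 2.0 access token you have already obtained for a user with access to the property. The endpoint and field names (`inspectionUrl`, `siteUrl`, `inspectionResult.indexStatusResult`, `verdict`, `coverageState` and so on) follow Google’s API reference as we understand it, so check them against the current documentation before relying on them. The helper names are hypothetical. The API reports index status, so use the tool’s interface when you need the rendered HTML and screenshot.

```javascript
// Search Console URL Inspection API endpoint.
const INSPECT_ENDPOINT =
  "https://searchconsole.googleapis.com/v1/urlInspection/index:inspect";

// Build the POST request. siteUrl is the Search Console property, for example
// "https://www.example.com/" or "sc-domain:example.com".
function buildInspectionRequest({ accessToken, siteUrl, inspectionUrl }) {
  return {
    url: INSPECT_ENDPOINT,
    options: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${accessToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ inspectionUrl, siteUrl, languageCode: "en-GB" }),
    },
  };
}

// Pick out the index-status fields most relevant to JavaScript SEO.
function summariseInspection(json) {
  const status = (json.inspectionResult || {}).indexStatusResult || {};
  return {
    verdict: status.verdict,
    coverageState: status.coverageState,
    pageFetchState: status.pageFetchState,
    googleCanonical: status.googleCanonical,
    userCanonical: status.userCanonical,
  };
}

// Needs network access and a valid token.
async function inspectUrl(params) {
  const { url, options } = buildInspectionRequest(params);
  const response = await fetch(url, options);
  if (!response.ok) throw new Error(`URL Inspection API error: ${response.status}`);
  return summariseInspection(await response.json());
}
```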

JAVASCRIPT SEO BEST PRACTICES

- Indexable and unique URLs

Each page on your site should have a unique URL to help Google crawl and understand what your pages are about.
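A common JavaScript pitfall is hash-based routing, such as `/#/about`. Google generally ignores the `#fragment` part of a URL, so routes like these collapse into a single URL. Giving each view a real path with the History API (`history.pushState`) avoids this. The sketch below groups a list of app routes by the URL left once the fragment is removed, to flag collisions. `findCollidingUrls` is a hypothetical helper.

```javascript
// Group URLs by what remains once the #fragment is stripped.
// Any group with more than one member is not really a set of unique URLs.
function findCollidingUrls(urls) {
  const groups = new Map();
  for (const raw of urls) {
    const url = new URL(raw);
    url.hash = ""; // drop the fragment
    const key = url.href;
    if (!groups.has(key)) groups.set(key, []);
    groups.get(key).push(raw);
  }
  return [...groups].filter(([, members]) => members.length > 1);
}

const hashRoutes = [
  "https://www.example.com/#/",
  "https://www.example.com/#/about",
  "https://www.example.com/#/contact",
];
const historyRoutes = [
  "https://www.example.com/",
  "https://www.example.com/about",
  "https://www.example.com/contact",
];

console.log(findCollidingUrls(hashRoutes)); // one group: all three share https://www.example.com/
console.log(findCollidingUrls(historyRoutes)); // no collisions
```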

- Ensure easy rendering for search engines

Make it easy for search engines to understand your pages without needing to render them. Search engines rely on the initial HTML response to interpret your page’s content and follow your crawling and indexing rules. If they can’t, you’ll struggle to achieve competitive rankings.
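One way to check this is to compare the initial HTML with the rendered HTML and list important phrases that exist only after rendering. You can get the initial HTML by fetching the URL directly. The rendered HTML might, for example, be copied from the URL Inspection tool’s tested page or saved from a headless browser. The sketch assumes you already have both as strings. The key phrases and helper names are hypothetical, and the tag stripping is a rough regex-based simplification.

```javascript
// Rough text extraction: drop scripts, strip tags, collapse whitespace.
function textOf(html) {
  return html
    .replace(/<script\b[\s\S]*?<\/script>/gi, " ")
    .replace(/<[^>]*>/g, " ")
    .replace(/\s+/g, " ")
    .trim();
}

// Key phrases that appear only after rendering depend on JavaScript.
function findRenderDependentContent(rawHtml, renderedHtml, keyPhrases) {
  const rawText = textOf(rawHtml).toLowerCase();
  const renderedText = textOf(renderedHtml).toLowerCase();
  return keyPhrases.filter((phrase) => {
    const p = phrase.toLowerCase();
    return !rawText.includes(p) && renderedText.includes(p);
  });
}

const rawHtml =
  '<html><body><h1>Red Running Shoes</h1><div id="reviews"></div>' +
  '<script src="/reviews.js"></script></body></html>';
const renderedHtml =
  '<html><body><h1>Red Running Shoes</h1><div id="reviews"><p>Customer reviews</p></div>' +
  '<script src="/reviews.js"></script></body></html>';

console.log(
  findRenderDependentContent(rawHtml, renderedHtml, ["Red Running Shoes", "Customer reviews", "Free returns"])
);
```

Anything this reports is content that search engines only see after rendering, which makes it a candidate for moving into the initial HTML.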

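- Canonical and meta robots tags should be part of your initial HTML response

Implementing these directives via JavaScript can cause delays, as Google only discovers them after crawling and rendering your pages. This wastes valuable crawl budget and can send search engines conflicting signals, which is why this approach should be avoided. It can also backfire. If the initial HTML contains a noindex robots meta tag, Google may skip rendering the page altogether, so JavaScript that later removes or changes the tag never gets the chance to run.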
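A quick way to confirm these directives are in place is to read them straight from the raw HTML response, before any JavaScript has run. The sketch below extracts the canonical URL and the robots meta tag, and flags `noindex`. It uses regular expressions instead of a full HTML parser and only looks at `<meta name="robots">`, so treat it as a simplified audit. `readHeadDirectives` is a hypothetical helper.

```javascript
// Read the canonical URL and robots directives from the initial HTML.
function readHeadDirectives(html) {
  const tags = html.match(/<(?:link|meta)\b[^>]*>/gi) || [];
  // Value of a quoted attribute, or null if it is missing.
  const attr = (tag, name) => {
    const m = tag.match(new RegExp(`\\s${name}\\s*=\\s*["']([^"']*)["']`, "i"));
    return m ? m[1] : null;
  };
  let canonical = null;
  let robots = null;
  for (const tag of tags) {
    if (/^<link/i.test(tag) && (attr(tag, "rel") || "").toLowerCase() === "canonical") {
      canonical = canonical ?? attr(tag, "href"); // keep the first one found
    }
    if (/^<meta/i.test(tag) && (attr(tag, "name") || "").toLowerCase() === "robots") {
      robots = robots ?? attr(tag, "content");
    }
  }
  return { canonical, robots, noindex: /\bnoindex\b/i.test(robots || "") };
}

const initialHtml = `<head>
<link rel="canonical" href="https://www.example.com/shoes/">
<meta name="robots" content="noindex, follow">
</head>`;

console.log(readHeadDirectives(initialHtml));
```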

- Prioritise critical content and navigational elements

If you can’t avoid having your pages rendered by search engines, ensure that key content and navigational elements are included in the initial HTML. This way, even if rendering takes time, the most important elements are already indexed.

- Allow search engines to access JavaScript resources

If your page uses JavaScript to load content, make sure those JavaScript resources are crawlable and not blocked in your robots.txt file.
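To spot-check whether a script is blocked, you can evaluate your robots.txt rules against its path. The sketch below is a simplified matcher written for illustration, not Google’s parser. It picks the group whose user-agent exactly matches the crawler name, falling back to `*`, and applies the longest matching `Allow`/`Disallow` rule. `Allow` wins ties, and the `*` and `$` wildcards are supported. The function names and the sample file are hypothetical.

```javascript
// Parse robots.txt into groups of { agents, rules }.
function parseRobotsTxt(text) {
  const groups = [];
  let current = null;
  let previousWasAgent = false;
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.replace(/#.*$/, "").trim();
    const colon = line.indexOf(":");
    if (colon === -1) continue;
    const field = line.slice(0, colon).trim().toLowerCase();
    const value = line.slice(colon + 1).trim();
    if (field === "user-agent") {
      // Consecutive User-agent lines share one group.
      if (!previousWasAgent) {
        current = { agents: [], rules: [] };
        groups.push(current);
      }
      current.agents.push(value.toLowerCase());
      previousWasAgent = true;
      continue;
    }
    previousWasAgent = false;
    if ((field === "allow" || field === "disallow") && current && value) {
      current.rules.push({ allow: field === "allow", pattern: value });
    }
  }
  return groups;
}

// Convert a rule path into a RegExp: * matches anything, a trailing $ anchors the end.
function ruleToRegExp(pattern) {
  const anchored = pattern.endsWith("$");
  const body = anchored ? pattern.slice(0, -1) : pattern;
  const escaped = body.replace(/[.+?^${}()|[\]\\]/g, "\\$&").replace(/\*/g, ".*");
  return new RegExp("^" + escaped + (anchored ? "$" : ""));
}

function isAllowed(robotsTxt, userAgent, path) {
  const groups = parseRobotsTxt(robotsTxt);
  const ua = userAgent.toLowerCase();
  let matching = groups.filter((g) => g.agents.includes(ua));
  if (matching.length === 0) matching = groups.filter((g) => g.agents.includes("*"));

  // Longest matching rule wins; on a tie, Allow wins.
  let best = null;
  for (const rule of matching.flatMap((g) => g.rules)) {
    if (!ruleToRegExp(rule.pattern).test(path)) continue;
    const longer = !best || rule.pattern.length > best.pattern.length;
    const tieAllow = best && rule.pattern.length === best.pattern.length && rule.allow;
    if (longer || tieAllow) best = rule;
  }
  return best ? best.allow : true;
}

const robotsTxt = `User-agent: *
Disallow: /assets/
Allow: /assets/css/
`;

console.log(isAllowed(robotsTxt, "Googlebot", "/assets/js/app.js")); // false: the JS bundle is blocked
console.log(isAllowed(robotsTxt, "Googlebot", "/assets/css/site.css")); // true
```

In this example, the fix would be to add `Allow: /assets/js/` or to remove the broad `Disallow: /assets/` rule.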

- Optimised metadata

Each page on your site should have a clear focus and target, with descriptive and optimised titles and meta descriptions. This helps both search engines and users understand the page’s content, making it easier for users to decide if it matches their search intent. A key point to flag with JavaScript is the potential mismatch between the initial HTML and the JavaScript-rendered version of a page, such as differing titles or canonical tags. These inconsistencies can confuse search engines and should be avoided.
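A simple consistency check is to compare the title and canonical tag in the initial HTML with those in the rendered HTML. The sketch below assumes you have both versions as strings, obtained as in any raw-versus-rendered comparison. It uses regex extraction rather than a full parser, and the helper names are hypothetical.

```javascript
// Extract the <title> text and canonical href (undefined if missing).
function readTitleAndCanonical(html) {
  const title = (html.match(/<title[^>]*>([\s\S]*?)<\/title>/i) || [])[1];
  const canonicalTag = (html.match(/<link\b[^>]*\srel\s*=\s*["']canonical["'][^>]*>/i) || [])[0];
  const canonical = canonicalTag
    ? (canonicalTag.match(/\shref\s*=\s*["']([^"']*)["']/i) || [])[1]
    : undefined;
  return { title: title?.trim(), canonical };
}

// Report every field whose value changes between the two versions.
function findMetadataMismatches(rawHtml, renderedHtml) {
  const before = readTitleAndCanonical(rawHtml);
  const after = readTitleAndCanonical(renderedHtml);
  return ["title", "canonical"]
    .filter((field) => before[field] !== after[field])
    .map((field) => ({ field, initialHtml: before[field], rendered: after[field] }));
}

const rawHead =
  '<head><title>Red Running Shoes | Example Store</title>' +
  '<link rel="canonical" href="https://www.example.com/shoes/red/"></head>';
// Hypothetical rendered version where client-side code overwrote the title.
const renderedHead =
  '<head><title>Example Store</title>' +
  '<link rel="canonical" href="https://www.example.com/shoes/red/"></head>';

console.log(findMetadataMismatches(rawHead, renderedHead));
```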

JavaScript can present challenges for SEO, but these can be minimised with a clear understanding and thorough audit of potential problem areas outlined in this guide. If your site is experiencing crawling or indexing issues related to JavaScript, or if you’d like to learn more about JavaScript SEO, get in touch – we’d be happy to help.